[et_pb_section fb_built=”1″ admin_label=”section” _builder_version=”3.0.47″][et_pb_row admin_label=”row” _builder_version=”3.0.48″ background_size=”initial” background_position=”top_left” background_repeat=”repeat”]

Big Data, Big Models, Big Promise

For the last few years, artificial intelligence (AI) has mostly lived in the research realm. Now AI adoption is moving from labs and pilots into production. Businesses generally start on the path to AI adoption with pilots that explore how AI can supercharge their enterprise intelligence. Once a pilot succeeds, companies deploy AI technologies across many production lines and production sites. Through machine learning (ML) and deep learning (DL), AI can now ingest, sift through, and categorize vast volumes of raw, unstructured data and turn that information into optimized, actionable business processes.

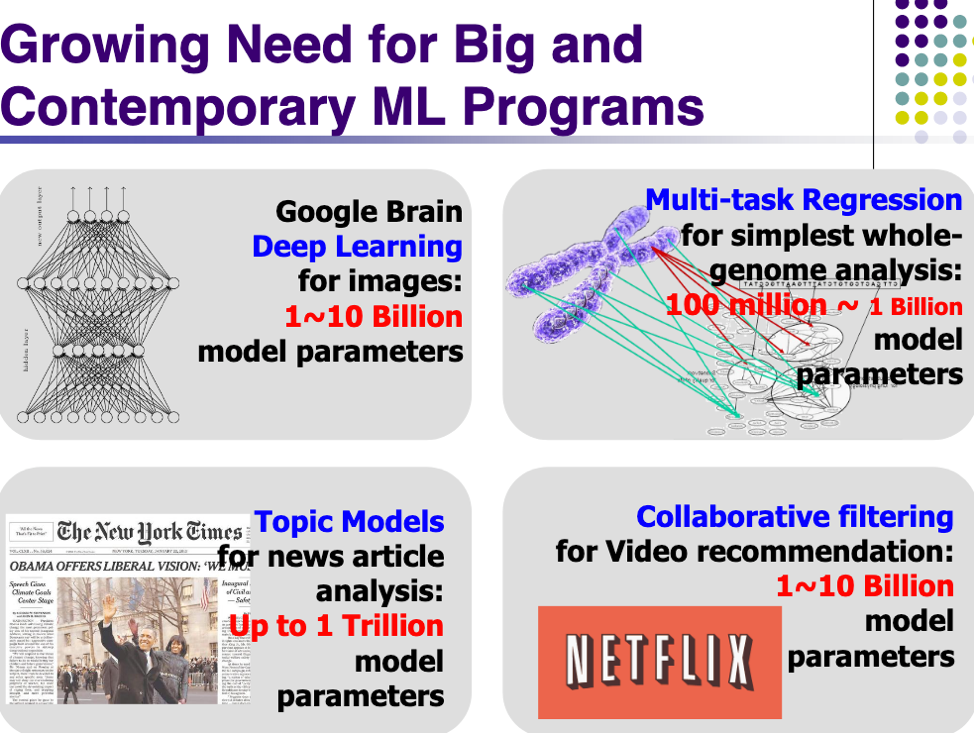

To power business AI, ML must now tackle industrial-scale problems with Big Models (hundreds of billions of parameters) trained on Big Data (terabytes or petabytes). The explosion in data, and the need for ML methods to scale beyond a single machine, are driving the need for closer harmony between AI software and hardware. Complex ML models for image recognition, for example, now have billions of parameters. Training models of that size on large data sets is not feasible on a single machine, or would at least take an impractically long time to complete.
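To put "Big Models" in numbers, here is a minimal back-of-envelope sketch. It assumes 32-bit (4-byte) floating-point parameters; the total training compute and the single-machine throughput are hypothetical values chosen only to show the arithmetic, not figures from the source.

```python
# Back-of-envelope sizing for a "Big Model" on one machine.
# All workload and throughput numbers below are illustrative assumptions.

BYTES_PER_FP32 = 4  # one 32-bit float parameter occupies 4 bytes


def weights_memory_gb(num_params: int, bytes_per_param: int = BYTES_PER_FP32) -> float:
    """Memory in GB (10**9 bytes) needed just to hold the model weights."""
    return num_params * bytes_per_param / 1e9


def training_days(total_flops: float, sustained_flops_per_sec: float) -> float:
    """Wall-clock days needed to perform total_flops at a sustained rate."""
    seconds = total_flops / sustained_flops_per_sec
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    params = 100 * 10**9  # hypothetical 100-billion-parameter model
    print(f"fp32 weights alone: {weights_memory_gb(params):.0f} GB")

    total_flops = 1e23   # assumed total training compute (hypothetical)
    one_machine = 1e14   # assumed 100 TFLOP/s sustained on one machine (hypothetical)
    print(f"one machine: {training_days(total_flops, one_machine):,.0f} days")
```

Under these assumptions the weights alone take 400 GB, more than a typical single GPU provides, and training needs more still, because gradients and optimizer state (for example, Adam's two moment estimates) are also kept per parameter. The assumed workload works out to roughly 11,574 days (over 30 years) on one machine.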

Source: A New Look at the System, Algorithm and Theory Foundations of Distributed Machine Learning by Eric P. Xing and Qirong Ho

Hard and Soft Power for AI

Graphics Processing Unit (GPU)

To tackle Big Data and Big Models, many industry experts have turned to graphics processing units (GPUs) to run DL and complex ML models. GPUs are specialized chips originally designed to handle intensive graphics and image processing, and computer gamers were among their first heavy users, relying on them to render visually complex games. A GPU is composed of many times more cores than a CPU, each of them smaller and simpler. With more computational units and more memory bandwidth than traditional CPUs, GPUs are well suited to the massively parallel arithmetic, chiefly large matrix multiplications, involved in running and training highly complex ML and DL models. Many large-scale deep learning projects now run on GPUs, a major step up in hardware capability for AI processing.
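Why do many small cores help? A dense neural-network layer is a matrix multiplication, and every element of the output can be computed independently of the others. The sketch below illustrates that independence by splitting the output rows across a pool of workers. It is an assumption-laden stand-in: Python threads play the role of GPU cores, so it shows the structure of the work, not a speed-up.

```python
# A dense layer's forward pass is a matrix multiply. Each output row depends
# only on one input row and the weights, so rows can be computed in parallel.
# Python threads stand in for GPU cores here (illustration, not a speed-up).
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]


def matmul_rows(a: Matrix, b: Matrix, rows: range) -> Matrix:
    """Compute only the given rows of the product a @ b."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in rows
    ]


def parallel_matmul(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Split the output rows into chunks and compute each chunk independently."""
    n = len(a)
    chunk = max(1, -(-n // workers))  # ceiling division
    ranges = [range(s, min(s + chunk, n)) for s in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda r: matmul_rows(a, b, r), ranges))
    # map() preserves input order, so concatenating the chunks rebuilds the result.
    return [row for part in parts for row in part]


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 inputs with 2 features each
    w = [[0.5, -1.0], [0.25, 2.0]]            # hypothetical 2x2 weight matrix
    print(parallel_matmul(x, w, workers=3))
```

Because no output element waits on another, the same work maps naturally onto thousands of GPU cores.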

Even with all the advances in hardware and GPU processing power, it is the software for AI that is essential to tackling Big Data and Big Models. Even with the power of a GPU, complex models can take weeks to train on a single GPU-equipped machine. To make DL and complex ML training feasible in terms of time and resources, training must be scaled out across clusters of machines equipped with multiple CPUs and GPUs.
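One common way to scale training out is synchronous data parallelism. Here is a minimal sketch, assuming a toy 1-D linear regression model (y ≈ w·x) and simulated workers: each worker computes the gradient on its own shard of the data, and the gradients are averaged, which is the job an all-reduce performs on a real cluster. With equal-sized shards, the averaged gradient equals the full-batch gradient, so the cluster takes the same step a single machine would while each worker touches only 1/N of the data.

```python
# Synchronous data-parallel gradient descent, simulated in one process.
# Toy model (assumed for illustration): y ≈ w * x, mean-squared-error loss.
from typing import List, Tuple

Data = List[Tuple[float, float]]


def mse_gradient(w: float, data: Data) -> float:
    """Derivative w.r.t. w of (1/n) * sum((w*x - y)**2)."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def shard(data: Data, n_workers: int) -> List[Data]:
    """Split data into n_workers equal, contiguous shards."""
    size = len(data) // n_workers
    assert size * n_workers == len(data), "use equal-sized shards"
    return [data[i * size:(i + 1) * size] for i in range(n_workers)]


def data_parallel_step(w: float, data: Data, n_workers: int, lr: float) -> float:
    # Computed serially here; on a cluster each worker computes one of these.
    local_grads = [mse_gradient(w, s) for s in shard(data, n_workers)]
    avg_grad = sum(local_grads) / n_workers  # the all-reduce (average) step
    return w - lr * avg_grad                 # every worker applies the same update


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # true slope is 3
    w = 0.0
    for _ in range(50):
        w = data_parallel_step(w, data, n_workers=4, lr=0.01)
    print(round(w, 4))
```

The design choice to average (rather than sum) keeps the step size independent of the number of workers, so the learning rate tuned on one machine still applies.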

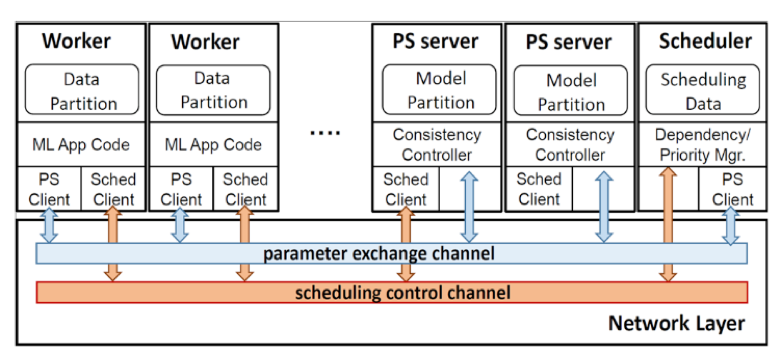

One way to do this is with a distributed DL engine that groups multiple CPUs, GPUs, laptops, or any other machines that can process data into a single “AI supercomputer”.
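Grouping machines of very different speeds raises a practical question: in synchronous training, every step waits for the slowest member. The sketch below shows one simple approach, assumed here rather than taken from any particular engine: split each batch in proportion to each device's measured throughput. The device names and throughput figures are hypothetical.

```python
# Load-balancing a batch across heterogeneous devices (illustrative sketch).
# Device names and samples-per-second figures are hypothetical.
from typing import Dict


def proportional_split(batch_size: int, throughput: Dict[str, float]) -> Dict[str, int]:
    """Split batch_size samples across devices in proportion to throughput.

    Uses largest-remainder rounding so the shares always sum to batch_size.
    """
    total = sum(throughput.values())
    exact = {d: batch_size * t / total for d, t in throughput.items()}
    shares = {d: int(v) for d, v in exact.items()}  # floor of each exact share
    leftover = batch_size - sum(shares.values())
    # Give the remaining samples to devices with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda d: exact[d] - shares[d], reverse=True)
    for d in by_remainder[:leftover]:
        shares[d] += 1
    return shares


def step_time(shares: Dict[str, int], throughput: Dict[str, float]) -> float:
    """In synchronous training, a step ends when the slowest device finishes."""
    return max(shares[d] / throughput[d] for d in shares)


if __name__ == "__main__":
    devices = {"gpu-server": 800.0, "cpu-server": 150.0, "laptop": 50.0}
    even = {d: 1200 // len(devices) for d in devices}  # naive equal split
    balanced = proportional_split(1200, devices)
    print("even:    ", even, f"{step_time(even, devices):.2f} s/step")
    print("balanced:", balanced, f"{step_time(balanced, devices):.2f} s/step")
```

With these made-up numbers, an even split leaves the fast GPU server idle while the laptop finishes its share (8 s per step), whereas the proportional split lets all three devices finish together (1.2 s per step).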

Source: A New Look at the System, Algorithm and Theory Foundations of Distributed Machine Learning by Eric P. Xing and Qirong Ho

Software for distributed DL and ML processing must handle substantial parameter synchronization across multiple machines. Well-architected software can substantially improve GPU and network bandwidth utilization. Scheduling computation and communication so that they overlap, for example by running them on separate threads, is the key to the performance of distributed DL and complex ML on GPUs. If poorly designed and implemented, training DL and complex ML models on multiple machines can actually be slower than training them on a single machine.
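A toy cost model makes the last two points concrete. It assumes compute splits perfectly across machines for the same global batch and that synchronization takes a fixed time per step; both are simplifications, and the timings are hypothetical. If communication simply follows computation, four machines can end up slower per step than one; overlapping the two lets the cluster come out ahead.

```python
# Toy per-step cost model for data-parallel training (all timings assumed).
#   compute_s: seconds of computation per step on a single machine
#   comm_s:    seconds to synchronize parameters/gradients across the cluster


def seconds_per_step(compute_s: float, comm_s: float, n_machines: int, overlap: bool) -> float:
    """Seconds per training step for the same global batch on n_machines.

    Assumes compute divides perfectly across machines. A single machine has
    nothing to synchronize. Without overlap, communication is added after
    compute; with perfect overlap, the slower of the two sets the pace.
    """
    compute = compute_s / n_machines
    if n_machines == 1:
        return compute
    return max(compute, comm_s) if overlap else compute + comm_s


if __name__ == "__main__":
    compute_s, comm_s = 1.0, 0.875  # hypothetical timings
    print("1 machine:             ", seconds_per_step(compute_s, comm_s, 1, overlap=False))
    print("4 machines, no overlap:", seconds_per_step(compute_s, comm_s, 4, overlap=False))
    print("4 machines, overlapped:", seconds_per_step(compute_s, comm_s, 4, overlap=True))
```

Real systems rarely achieve perfect overlap or perfect compute scaling, so this model brackets the outcome rather than predicting it.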

Better Together

A well-designed AI software solution that distributes DL and ML processing across multiple machines, paired with the right kind of powerful hardware, is a match made in AI heaven. This software and hardware power couple is a key driver in tearing down barriers to AI adoption across enterprises. Deploying AI successfully in production takes both the computing power of GPUs and a software architecture that can take full advantage of it.